Writing a bash-like shell in C

I learned and internalized a number of important software concepts by working on the “minishell” project at 42 school.

Automatically generated audio:

Many people working in or interested in tech rely on a shell, the most popular one being bash, followed by zsh, to interact with their computer. What better way to deeply understand the shell than coding one yourself?

The “minishell” project is often cited as one of the trickiest projects in the entire 42 curriculum. Here’s a list of the main things I learned while working on it:

- Writing a garbage collector (and understanding its pros and cons)

- How the shell takes input from the command line, parses it, and executes it

- How command pipelines and file redirections work

- Process creation, management, and communication

- The logic behind shell exit statuses

- How the shell handles signals, e.g.,

SIGINTsent withCtrl-CandSIGQUITsent withCtrl-\ - Effective collaboration using Notion and GitHub

- Good documentation of code

Setup

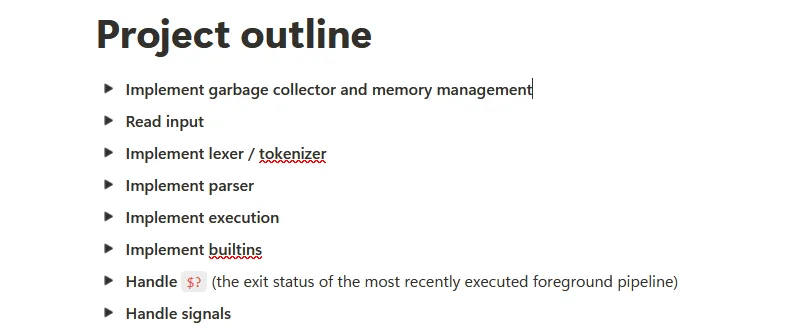

This was a group project, and I was honored to partner with Robert. We used Notion as our primary collaboration tool. While GitHub does offer project management features, Notion seemed better suited in this particular case. Notion was where all the relevant brainstorming notes went. We created a detailed project overview, listing step by step the overall logic of the program, what the different parts of the program would be, and what each part would achieve. Here is what the Project Outline page on Notion looked like (each section is expandable in the original Notion page):

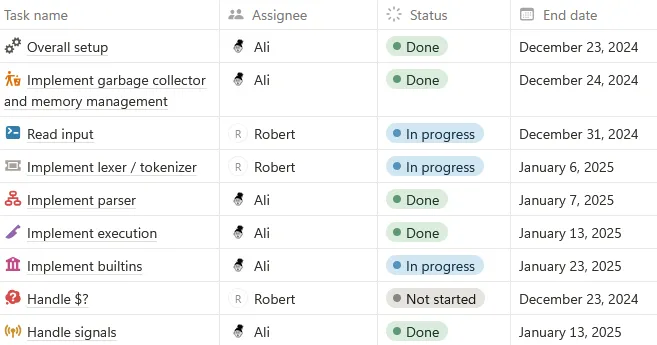

Using this, we came up with a task list. This is what it looked like:

In addition, we had a Notes section on Notion where we put any other ideas and thoughts of interest.

GitHub is where our code lived, so we did make use of GitHub’s collaboration features. E.g., we set up rules in the repository’s settings so that changes couldn’t be directly pushed to the main branch; all work had to be done on separate branches, and then a pull request created to merge with main. Since we decided to look over each other’s work before it was merged, we also enabled the rule to require approval before merging, ensuring the person who created the pull request couldn’t merge it directly. And, of course, it’s important to require branches to be up-to-date before merging.

Below I will explain the key features of our work. The code can be seen in full here.

The program

Overall logic

We implemented the core parts of bash, without recreating its entire functionality. For example, we implemented the handling of file redirections (<, <<, >, >>), environment variables (accessed with $ and the env command), and any number of pipes (|). But we did not implement background processes (&), wildcards (*), or other command chaining and grouping functionality (e.g., ;, &&, ||, ()).

Here is how our shell program works at a high level:

- Input reader: The user is given a prompt to enter commands; the command history is saved and can be accessed with arrow keys.

- Tokenizer: The string (line of text) inputted by the user is split by spaces, except for parts within quotes (single or double).

$followed by an environment variable name or?(last exit status) is expanded within double quotes or unquoted strings. - Parser: The parser’s job is to understand what each token means: Is it a command name, command argument, filename, special character, etc.?

- Execution: Child processes are created to execute each command. File redirections and command pipelines are handled. The exit status of the final command in a pipeline is set accordingly.

- Signals: The user can send

SIGINT(Ctrl-C) orSIGQUIT(Ctrl-\) at any time, and the shell reacts appropriately depending on its current state.

All of this was done without using any global variables, except one for the very specific task of indicating the current signal (SIGINT or SIGQUIT), if received.

External functions used

A limited number of external/library functions were permitted and used. Most of the functionality was coded using our own functions. Here is a table of library functions used, along with the associated library.

| Library | Functions from this library used in our program |

|---|---|

GNU Readline Library - readline.h | readline, rl_clear_history, rl_on_new_line, rl_replace_line, rl_redisplay, add_history |

Standard Library - stdlib.h | malloc, free, exit, getenv |

UNIX Standard - unistd.h | write, access, close, read, fork, execve, pipe, dup2, unlink, chdir, getcwd |

Standard Input/Output - stdio.h | printf, perror |

Signal Handling - signal.h | sigaction, sigemptyset, sigaddset |

Process Control - sys/wait.h | waitpid |

Directory Operations - dirent.h | opendir, readdir, closedir |

File Control - fcntl.h | open |

Writing a garbage collector

C is notorious for its cumbersome memory management. I decided to create a garbage collector, which helped reduce the headache of manual memory tracking and allowed us to focus more on the shell’s logic. At the same time, it was a great learning opportunity to think through the process of automating memory management, often taken for granted in higher-level languages. This garbage collector was designed to have minimal performance overhead in our context, and I believe its advantages far outweighed its costs.

The advantages of the garbage collector in our case were:

- Eliminating the risk of double

free(a common and dangerous error). - Eliminating the risk of memory leaks.

- Removing the need to manually check for

mallocfailure after every call. - Eliminating the risk of double

closefor opened file descriptors. - Eliminating the risk of forgetting to close file descriptors.

This helped ensure that our program cleaned up after itself properly, rather than relying solely on the operating system reclaiming resources upon exit (which can mask leaks during development, even if valgrind catches them later).

The main idea is this: Two linked lists are maintained—one containing all pointers allocated with malloc (that must eventually be freed) and the other containing all file descriptors opened (that must eventually be closed).

The key functions I wrote for this purpose are gc_malloc, gc_free, gc_open, gc_close, and gc_exit. For cases where an external function returns a dynamically allocated (malloc’d) pointer (e.g., readline()), there are also gc_add_to_allocs and similarly gc_add_to_open_fds (for FDs obtained outside gc_open).

So, throughout the program, instead of directly using malloc, we used gc_malloc, which performs these steps:

- Call

malloc. - Check for

mallocfailure: IfmallocreturnsNULL, free all previously tracked memory, close all tracked file descriptors using the garbage collector’s cleanup mechanism, and exit the program gracefully (as memory allocation failure often indicates a critical issue). - Add the allocated pointer to a linked list: This list tracks dynamically allocated memory that hasn’t yet been freed via the garbage collector.

Anytime we were done with some allocated memory and wanted to free it, instead of using free, we used gc_free. This function looks for the given pointer in the linked list, frees the pointer using free, and then removes the corresponding node from the list. This prevents double free errors, even if gc_free is accidentally called again on the same pointer.

gc_open and gc_close follow a very similar logic to gc_malloc and gc_free but deal with file descriptors (integers) instead of pointers. Interestingly, the same linked list structure could be used for both, storing file descriptors cast to void* and casting them back to int before calling close.

The gc_exit function is called whenever we intend to exit the program. This function ensures that all pointers and file descriptors tracked by our garbage collector lists are cleaned up (freed and closed, respectively) before the program terminates using the standard exit function with the appropriate exit status.

Reading user input

User input is read and history maintained using the GNU Readline library, which is also used by bash itself. We use the library’s readline function to display a custom prompt (“minishell$”), after which the user can enter their command line. Standard Emacs-like keybindings are available (e.g., Ctrl-A, Ctrl-E, Ctrl-W, Ctrl-U, Ctrl-L). The readline library’s add_history function is called each time a valid line from the user is received, allowing command history access via the up and down arrow keys.

Since the line returned by readline is dynamically allocated, we add it to our garbage collector’s list of allocated pointers using gc_add_to_allocs immediately after receiving it.

Tokenizing user input

The tokenizer takes the line from the readline function and splits it into tokens (saved as a NULL-terminated array of strings, char **). Generally, a token is delimited by whitespace. However, sections enclosed in single (') or double (") quotes are treated as single tokens. Within double quotes, $ followed by an environment variable name or ? is expanded; within single quotes, no expansion occurs. For example, the input "< file1 cat | grep -i "hello, $USER" > file2" might become {"<", "file1", "cat", "|", "grep", "-i", "hello, username", ">", "file2", NULL} (assuming $USER is “username”).

Note that with nested quotes (only possible with alternating single and double quotes), only the outer quotes determining the token boundary are removed. For example, awk '{count++} END {print count}' becomes {"awk", "{count++} END {print count}", NULL}. However, awk "'{count++} END {print count}'" becomes {"awk", "'{count++} END {print count}'", NULL} (inner single quotes preserved).

Following bash, redirection operators (<, <<, >, >>) act as delimiters even without surrounding spaces. All these are equivalent: echo hello > outfile, echo hello>outfile, echo hello >outfile, echo hello> outfile, and are tokenized as {"echo", "hello", ">", "outfile", NULL}.

Also following bash, if quoted sections directly abut unquoted text or other quoted sections without spaces, they are concatenated into a single token after quote removal. E.g., grep "hello"world becomes {"grep", "helloworld", NULL}.

If the last token is a pipe (|), our shell throws a syntax error, unlike bash which prompts for more input. Other syntax errors (like missing filenames after redirection) are typically handled by the parser.

Parsing the tokens and preparing for execution

The parser takes the char ** token array produced by the tokenizer and builds a structure representing the command(s) to be executed. We used a linked list of “command groups”, where each group corresponds to a single command between pipes (or the only command if no pipes exist). Each command group contains all information needed for execution:

- The command name and its arguments (

char **cmd_args, wherecmd_args[0]is the command name). - Whether the command is a built-in or an external program (

t_cmd_type). - The file descriptor for input (

int in_fd), defaulting tostdinbut potentially redirected to a file or the read-end of a pipe. - The file descriptor for output (

int out_fd), defaulting tostdoutbut potentially redirected to a file or the write-end of a pipe. - Pointers to the previous and next command groups in the pipeline (if any).

Our t_cmd_grp struct looked something like this:

typedef struct s_cmd_grp

{

char *cmd_name; // Command name (e.g., "ls", "grep")

char **cmd_args; // NULL-terminated array of args (args[0] is cmd_name)

t_cmd_type cmd_type; // enum (BUILTIN or EXTERNAL)

int in_fd; // Input file descriptor (0=stdin, pipe-read, file)

int out_fd; // Output file descriptor (1=stdout, pipe-write, file)

struct s_cmd_grp *previous; // Previous command group in pipeline (or NULL)

struct s_cmd_grp *next; // Next command group in pipeline (or NULL)

} t_cmd_grp;Here’s a high-level view of how the parser processes the tokens:

- It identifies the command name (usually the first token unless it’s a redirection).

- It collects subsequent tokens as arguments until a metacharacter (

|,<,>,>>,<<) or the end of the token list is reached. - When a redirection operator (

<,>,>>) is encountered, the next token is treated as a filename. The file is opened (usinggc_open), and thein_fdorout_fdof the current command group is updated. Error handling occurs if the file cannot be opened or if the filename token is missing. - When a heredoc operator (

<<) is encountered followed by a delimiter token, the shell prompts the user for input line by line until the delimiter is entered on a line by itself. This input is typically stored in a temporary file (e.g.,/tmp/minishell_heredoc). This temp file is opened (usinggc_open), its file descriptor is set as thein_fdfor the command group, and crucially,unlinkis called on the temp file immediately after opening. This makes the filename disappear from the directory listing, but the open file descriptor remains valid until closed, ensuring automatic cleanup even if the shell crashes. - If a pipe (

|) is encountered, a pipe is created (usingpipe()), theout_fdof the current command group is set to the write-end of the pipe, and parsing continues for the next command group, whosein_fdwill be set to the read-end of the pipe.

Throughout this process, syntax errors are detected (e.g., > > file, | |, redirection without filename) and reported to the user, preventing execution.

Writing the built-in commands

Our minishell included the following built-in commands:

echowith the-noptioncdwith only a relative or absolute pathpwdwith no optionsexportwith arguments (but without support for marking shell variables for export, only environment variables)unsetwith argumentsenvwith no options or argumentsexitwith an optional numeric status

Being “built-in” means that the shell’s own code executes these commands directly, instead of searching for and executing an external program from the PATH.

Each built-in corresponds to a function in our program (e.g., ft_echo, ft_cd, ft_pwd, etc., where ft refers to 42).

ft_echo: Prints its arguments separated by spaces. If the first argument is-n, no trailing newline is printed; otherwise, a newline is added.ft_cd: Uses thechdirfunction to change the current working directory. It updates thePWDandOLDPWDenvironment variables accordingly. Throws a “too many arguments” error if more than one path is given. If no arguments are supplied, it attempts to change to the directory specified by theHOMEenvironment variable.ft_pwd: Uses thegetcwdfunction to get and print the current working directory path. (Alternatively, it could print thePWDenvironment variable, butgetcwdis generally more reliable).ft_export: Without arguments, it prints the list of environment variables in a specific format (similar todeclare -xin bash, but possibly simpler in our case, perhaps matchingenv). With arguments, it attempts to add or update environment variables. Arguments should be in the formatNAME=value. It performs validity checks onNAME(must start with a letter or underscore, followed by letters, numbers, or underscores). An error is reported for invalid names or formats. IfNAMEis valid but=valueis missing, we reported an error, as our minishell didn’t support shell-local variables that could be marked for export later.ft_unset: Takes variable names as arguments and removes the corresponding environment variables. Performs validity checks on the names.ft_env: Prints the list of current environment variables (name=value pairs, one per line).ft_exit: Causes the minishell to terminate. If a numeric argument is provided (e.g.,exit 1), the shell exits with that status code (modulo 256). If no argument is given, the shell exits with the status code of the last executed command. Handles non-numeric arguments appropriately (prints an error and exits with a specific status like 2 or 1).

Executing the commands

Once the parser has built the linked list of command groups, the execution phase begins. For each command group, a child process is typically created using fork() (with exceptions for certain built-ins, see below). Within each child process:

- Redirection: If

cmd_grp.in_fdis notSTDIN_FILENO(0) orcmd_grp.out_fdis notSTDOUT_FILENO(1), thedup2function is used to duplicate the appropriate file descriptor (in_fdorout_fd) onto standard input (0) or standard output (1), respectively. Any original file descriptors used for redirection (from files or pipes) that are no longer needed in the child are closed. - Execution:

- If the command is a built-in, the corresponding

ft_function (likeft_echo,ft_pwd,ft_env) is called directly within the child process. After the built-in completes, the child process exits with an appropriate status (usually 0 for success, non-zero for failure). - If the command is external, the

execvefunction is called. This function attempts to replace the current process image (the child process) with the specified external program (found via thePATHenvironment variable). Arguments (cmd_grp.cmd_args) and the current environment variables are passed toexecve. Ifexecvefails (e.g., command not found), an error message is printed, and the child exits with a specific failure status (e.g., 127).

- If the command is a built-in, the corresponding

Important Caveat for Built-ins: Four built-ins (cd, export, unset, exit) modify the state of the shell process itself (current directory, environment variables, or termination). Running these in a child process would have no effect on the parent shell. Therefore:

- If the user enters a command line containing only one of these four built-ins (no pipes), the corresponding

ft_function is executed directly in the parent shell process. No child process is created for this command. - If these built-ins appear within a pipeline (e.g.,

export VAR=1 | grep VAR), they are executed in child processes, meaning their effect will be lost once the child exits. This matches the behavior of standard shells like bash. (Theexitcommand in a pipeline would just terminate that child process).

After potentially forking child processes, the parent shell process must:

- Close any pipe file descriptors it doesn’t need (e.g., the write-end of a pipe after forking the left-side child, the read-end after forking the right-side child). This is crucial for

EOFto propagate correctly through pipelines. - Wait for all child processes in the pipeline to terminate using

waitpid. It waits for the last command in the pipeline specifically to retrieve its exit status. - Save the exit status of the last command to make it available via

$?.

Handling Signals

Our shell needed to handle user-generated signals gracefully, primarily SIGINT (Ctrl-C) and SIGQUIT (Ctrl-\). We also naturally handled EOF (Ctrl-D), although it’s technically an end-of-file condition on input, not a signal.

The shell’s response to SIGINT and SIGQUIT depends on its current state. We used the sigaction function to set up signal handlers – functions that are called when a specific signal is received. Our set_signal_handler function was called at different points to switch between signal handling modes:

-

Interactive Mode: When the shell is displaying the prompt (

minishell$) and waiting for user input:SIGINT(Ctrl-C): Abort the current input line, print a newline, and display a fresh prompt. Do not exit the shell.SIGQUIT(Ctrl-\): Ignored. Does nothing.

-

Heredoc Mode: When the shell is reading input for a heredoc (showing a

>prompt):SIGINT(Ctrl-C): Abort the heredoc input, cancel the entire command line that contained the heredoc, print a newline, and display a freshminishell$prompt.SIGQUIT(Ctrl-\): Ignored. Does nothing.

-

Non-interactive Mode (Child Process Running): When the shell has launched one or more child processes to execute a command pipeline:

SIGINT(Ctrl-C): The parent shell ignoresSIGINTitself (soCtrl-Cdoesn’t kill the shell). The signal is passed to the foreground child process group. The default action forSIGINTusually terminates the process. The parent shell then waits for the children and eventually displays a new prompt. A newline might be printed by the signal handler in the parent for cleaner output.SIGQUIT(Ctrl-\): Similar toSIGINT, the parent ignores it, and the signal is passed to the children. The default action forSIGQUITusually terminates the process and potentially dumps core. The shell prints “Quit (core dumped)” (or similar) after the child terminates and displays a new prompt.

Setting signal handlers appropriately (e.g., using SIG_DFL for default behavior in children, or custom handler functions) at the right times (before readline, before fork, after fork in parent/child) is key to achieving this behavior.

Closing remarks

Before starting the project, it naturally felt intimidating. I had previously never programmed something of this complexity. After finishing this project, it now feels like a much more manageable challenge, albeit a complex one. The process demystified many aspects of how shells operate. I am now ready for bigger challenges, which might also initially seem intimidating or even insurmountable. However, I know that problems are inevitable, but they are also soluble.